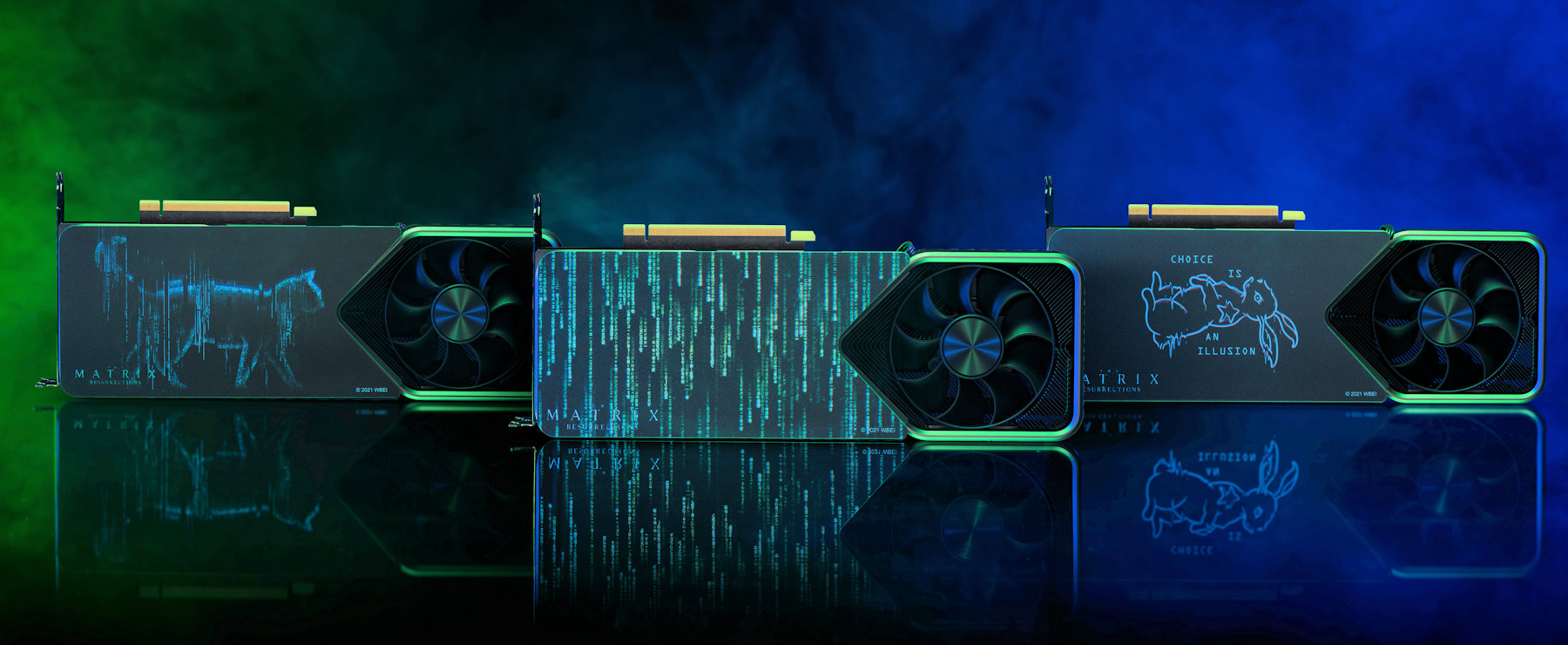

Nvidia contest offers three Matrix Resurrection-themed PCs with RTX 3080 Ti or 3090 GPUs | TechRadar

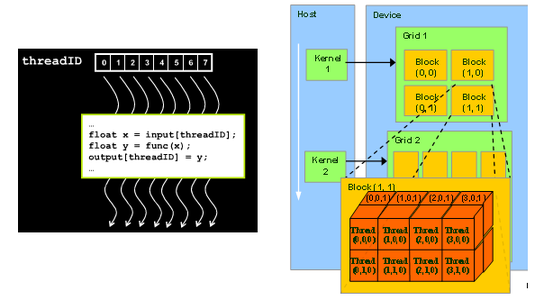

Matrix Operations on the GPU. CIS 665: GPU Programming and Architecture, TA: Joseph Kider.

Whoa, NVIDIA Is Giving Away Badass Matrix-Themed GeForce RTX PCs And Custom GPU Backplates | HotHardware

Understanding The Efficiency Of GPU Algorithms For Matrix-Matrix Multiplication And Its Properties | Pianalytix

Figure 3 from Efficient Sparse Matrix Multiplication on GPU for Large Social Network Analysis | Semantic Scholar

![4 The advantages of matrix multiplication in GPU versus CPU [25]](https://www.researchgate.net/profile/Snezhana-Pleshkova-2/publication/320674344/figure/fig3/AS:833078729650176@1575632855285/The-advantages-of-matrix-multiplication-in-GPU-versus-CPU-25.png)